Generative AI seems to be having something of a moment. Large language models have captured the public imagination thanks to ChatGPT’s ability to give plausible responses across a wide range of knowledge domains. The responses seem fast, well-structured, and often on point. To the casual observer, this can feel almost sentient.

Scratch beneath the surface and the results can start to feel less impressive. The responses are consistently formulaic in both structure and style. Large language models can’t reason or understand intent. All they really do is work out which word to put next, based on a model trained on vast amounts of text. They can’t solve puzzles, do mathematics, or discern new knowledge.

Large language models may provide confident answers, but they will happily reproduce misinformation from any of their sources and not make any effort to weigh up different perspectives. The responses lack an authentic voice and even have a tendency to fabricate references. These models could be described as consummate bullshit artists.

A tipping point

Despite these apparent shortcomings, large language models might have arrived at a “Spinning Jenny” moment, where they have the potential to transform how we approach creative tasks such as writing reports and cutting code. The problem is that our ability to understand their proper context and potential may be distorted by an unhelpful explosion of hype.

Vendors are falling over themselves to add an “AI” tag to their product names, much as the “e-” prefix was over-used during the first internet boom. There has been a Cambrian explosion of new tools promising to change the way we communicate, collaborate, and create. Commentators who haven’t heard of the Luddite fallacy are heralding the imminent demise of any vaguely creative role, including software engineering.

The long-term impact of new technologies can be difficult to predict and easy to underestimate. Roy Amara summed this up nicely in an adage about forecasting the effects of technology that has become known as Amara’s Law:

We tend to overestimate the effect of a technology in the short run and underestimate the effect in the long run.

A tipping point may have been reached, but the current hype storm feels similar to the furore that surrounded the internet in the mid-1990s. While it had become clear that the “web” would have an impact on everybody’s lives, it was less obvious that social media would emerge as the internet’s “killer app” and that most people would engage with it through their mobile phones. On a darker note, it was also difficult to predict that the internet would be used to disseminate political disinformation on a grand scale, enabling extremists and emboldening conspiracy theorists.

Hype cycles and the perils of prediction

In the increasingly hucksterish environment that surrounds AI, it can be easy to succumb to world-weary cynicism and roll your eyes whenever ChatGPT is mentioned. We’ve been here before, of course, with emerging technologies from blockchain to the metaverse failing to live up to expectations. The risk is that in reacting to this hysteria we may miss emerging opportunities.

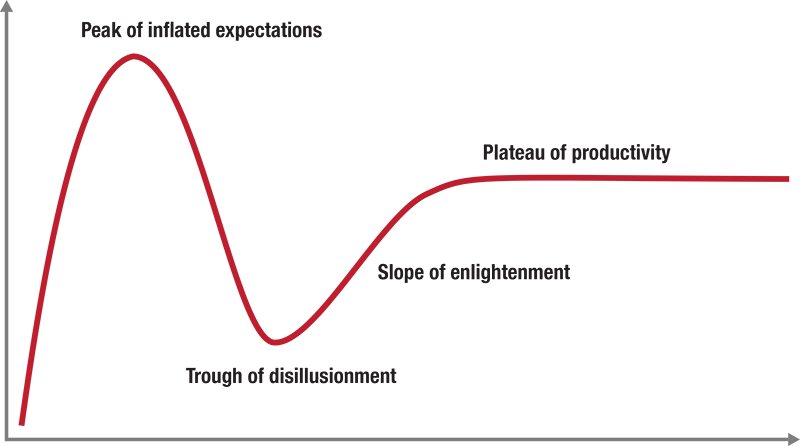

Gartner defined the “hype cycle” to describe the way that people tend to respond to new and emerging technologies. It’s a pattern that should be familiar to anybody who’s been around long enough to see technologies go from initial hype to eventual acceptance.

First comes the “peak of inflated expectations”, as excitement spreads about a new technology’s game-changing potential, often beyond the bounds of what might be regarded as rational. This early, heady enthusiasm can rapidly give way to the “trough of disillusionment” when it becomes clear that things aren’t “different this time” after all.

This “boom and bust” can be damaging because it can give rise to botched implementations by misguided early adopters. The disillusionment and disappointment that accompany any hype hangover can lead to potential value being overlooked. A technology may be discarded for failing to live up to the hype, regardless of the value it can provide.

Eventually, the reputation of a technology recovers and it passes into the “slope of enlightenment”. A more balanced and nuanced understanding of the technology’s potential value starts to come into view, leading to the “plateau of productivity”, where it settles into an accepted niche and its capabilities are well understood.

The lesson here is that utilising new technology effectively will inevitably involve some long, hard work. Early enthusiasm should be treated with caution until it can be better tempered by experience. We should also be wary of being too dismissive of a new technology as a reaction to unhelpful initial hype. The challenge is to chart a reasonable course between these two extremes.

Hoping for a softer landing

Where does this leave large language models? Very much on the “peak of inflated expectations”. Technology often thrives on the new, and an implementation of AI that appears to be accessible to all comers is worth getting excited about. The wider economic environment also has an influence here. It’s no surprise that an industry suffering from layoffs and consolidation is focusing on large language models as a much-needed source of good news.

The problem is that these models may not live up to some of the hype that’s been generated for them. Tools will emerge that make us more productive, but they may not usher in the brave new world that is currently being imagined. New jobs will be created and old jobs will be transformed, but the technology is likely to extend our capabilities rather than make them redundant.

Meanwhile, we should be wary of the potential ethical and social risks. The regulatory environment tends to lag behind the pace of technological innovation, potentially leaving us vulnerable to the arbitrary decisions of biased models. The training inputs for large language models represent a copyright nightmare. The technology is relatively cheap and could be used to generate disinformation on an industrial scale. If these models are not used responsibly, their very plausibility could erode trust in written communication by polluting the internet with nonsense.