There is much discussion on what Generative AI is going to mean for jobs. The best contribution I read during the last couple of months came from Brian Merchant, author of Blood in the Machine (which is the title of his substack as well as the book he wrote about the Luddites). Brian draws insightful comparisons between what happens today and what happened during the early Industrial Revolution.

TL;DR

The fact that Generative AI often produces unreliable, shoddy results is often presented as an argument that it will therefore be unable to disrupt the jobs market. This is probably not true. GenAI doesn’t need to be(come) good to be disruptive. Areas where shoddy work can have value are definitely at risk. And by the way: no, that isn’t coding. Coding needs to be GOOD; IT is far too brittle to be able to work with shoddy code.

This is like at the start of the Industrial Revolution. The early weaving machines produced ‘cheap’ products (in both meanings) and it was the introduction of that ‘cheap’ category that was actually disruptive. Good machines came much much later and definitely not for all areas (which is why we still have sweat shops where our clothes are created, and no machine that folds your laundry, or paints your wall).

But there is a difference. Early machines were separate inventions creating a comparable product. GenAI is trained on the output of humans: their skill is ‘cloned’ and it is this ‘cloned skill’ that produces the ‘comparable product’. GenAI is not ‘copying art’, it is ‘cloning the artisans’. And our intellectual rights haven’t yet caught up.

Having said that, when preparing this piece, I found out ChatGPT really has a sense of humour 🙂

The fact that GenAI results may be shoddy is often used as a critique by people saying “they cannot replace humans”. But what Brian pointed out is that GenAI doesn’t need to be particularly good to replace labour (see here and here). The first machines that replaced artisans produced pretty shoddy stuff too. But it was ‘good enough’ to replace them.

In fact, what the technology opened up was the possibility of ‘cheap’. And that is ‘cheap’ in both meanings. It was this ‘cheap’ that disrupted the labour market; really good machines came much, much later. And this echoes what some companies are doing now: slashing creative work like writing and graphics in marketing and replacing it with mostly shoddy, boring, cheap results that we can make a lot of. As a result, wages in those areas are going down and the remaining people have far less enjoyable jobs.

So, what kind of jobs are at risk? Well, jobs where cheap, shoddy work can have real value. So, shoddy graphic art. Shoddy text descriptions of products.

And by the way, people, coding is not such a job! Code must be reliable and GOOD. Information Technology itself is extremely brittle, after all (so ‘GenAI creating better GenAI’ is not a realistic trajectory). If I were still a software engineer, I would not worry about being replaced. I would worry that a few years from now my work would in part consist of having to repair and refactor lots of brittle, shoddy GenAI-generated code.

I am reminded of what was expected of the Internet in the 1990s. What people expected was perfect information, education, health, democracy, etc. for all of humanity, all thanks to ‘the internet’. And we got some good stuff, from brilliant educational videos on YouTube to worthwhile blogs, to Wikipedia. But what we also got was ‘cheap’. Lots and lots of it. And we got mental influencing (including disinformation) at a huge scale and social media that by their nature do not support society but undermine its coherence (see my ‘still top dog?’ talk from a few years ago). And surveillance capitalism. And.

There are reasons enough to — next to a few great things — expect the same ‘cheapness’ and other not-universally-positive effects from GenAI, especially in the creative area (as there, reliability often isn’t much of an issue). Sam Altman, Elon Musk, they are like the craziest of the optimists of the 90’s internet boom who sometimes even predicted that the basic internet would become sentient in a few years. Really, that was a thing then.

Anyway.

But there is also a fundamental difference with the beginning of the Industrial Revolution

Generative AI is trained on (hopefully good/correct/worthwhile) output created by humans. It stores information about the token/pixel patterns of that output in a volume of parameters. With those parameters it can create new patterns based on a combination of the statistical relations in the old patterns and the throwing of the dice. Sometimes there is so much information about certain data in the parameters that it can actually recreate the training data. When we like that effect, it is called ‘memorisation’. When we dislike it, it is called ‘data leakage’. But the wanted and unwanted versions are one and the same thing. The unwanted version has now led to, for instance, The New York Times suing OpenAI for copyright infringement after OpenAI used the NYT website to train GPT. It is interesting to note that neither NYT nor OpenAI wanted this to happen. But OpenAI was unable to prevent it. OpenAI might be able to find a solution for a few sources such as NYT (e.g. a special model overtrained on a certain source that recognises plagiarism), but it is hard to see how they can completely solve this.
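To make that mechanism a bit more tangible, here is a deliberately tiny sketch. It is an assumption-laden toy and not how GPT actually works: the ‘parameters’ are just counts of which word follows which, and the names `train` and `generate` as well as the example sentences are made up for this illustration. It does show the two ingredients mentioned above, stored statistics plus dice, and how they turn into verbatim reproduction when the statistics come from a single source.

```python
import random
from collections import Counter, defaultdict


def train(texts):
    """Count which token follows which. These counts are the toy 'parameters'."""
    counts = defaultdict(Counter)
    for text in texts:
        tokens = text.split()
        for current, following in zip(tokens, tokens[1:]):
            counts[current][following] += 1
    return counts


def generate(counts, start, max_tokens, rng):
    """Produce tokens one at a time, rolling weighted dice for each next token."""
    output = [start]
    while len(output) < max_tokens:
        followers = counts.get(output[-1])
        if not followers:
            break  # nothing ever followed this token in the training data
        next_token = rng.choices(
            list(followers), weights=list(followers.values())
        )[0]
        output.append(next_token)
    return " ".join(output)


# Several overlapping sources: the dice matter, so the output is a remix.
corpus = ["the cat sat on the mat", "the dog sat on the rug"]
mixed = generate(train(corpus), "the", 6, random.Random(1))
print(mixed)

# One source in which every token has exactly one follower: the dice have
# nothing to choose from, so the 'model' can only replay its training data.
source = "the quick brown fox jumps over a lazy dog"
memorised = generate(train([source]), "the", 20, random.Random(0))
print(memorised == source)
```

In the second case the sentence comes back word for word whatever the random seed is. Nothing in the code distinguishes a wanted replay from an unwanted one, which is the point made above about ‘memorisation’ and ‘data leakage’ being the same thing.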

But there is a different version of this problem that isn’t based on copyright but on trademark. See this example (from that previous post on memorisation):

None of the images are original art. There is technically no copyright breach here. But you immediately know that there is a problem. No way Disney will let you generate your own Pixar-style animation or Donald Duck stories. And there are many more examples. For the most popular styles there now may be band-aids, but the problem runs much deeper.

I realised recently that the kind of shift here is not so much copying creations but ‘copying’ (‘cloning’) creators. By using the creations, GenAI is ‘sort-of-cloning the (collective) creator(s)’ without consent. This — while innovative — is also a form of theft, as none of these creators gave permission to be ‘sort-of-cloned’ that way.

Our intellectual property rights were not designed for what GenAI does. Even if your work is not copied, your creativity, your style, etc. are copied, in a ‘cheap’ way. This is indeed a bit like what happened at the start of the Industrial Revolution. But… the big difference is that weaving (pottery etc.) machines were independent inventions (the machines might have been based on understanding the principles of the art, but they were not ‘trained’ on the actual output of the artisans).

What we might therefore need is an extension of ‘intellectual property rights’ to ‘intellectuality rights’ (bypassing the potential solution of stating that you ‘own yourself’, though maybe that is even an effective shortcut).

Now, GenAI is trained on a large collection of ‘the output of artisan skill’, and how much of an individual skill gets ‘cloned’ depends on the complex interplay of training data and parameter volume (more on that another time). The goal of the GenAI people is not to have recognisable ‘cloning’ at all; they want to use all those skills to make an ‘independent skilled agent’. And let’s be fair: humans are mostly copying machines too. The whole enterprise rests on that assumption, namely that the technique in the end results, like with humans learning, in independent ‘intelligence’. Assessments of how far that is attainable vary. We might as well get some sort of shoddy average approximation without actual intelligence (e.g. understanding your own output, see below).

Anyway, legally, none of this will be easy. Years of popcorn, legal careers and all that.

Post Scriptum

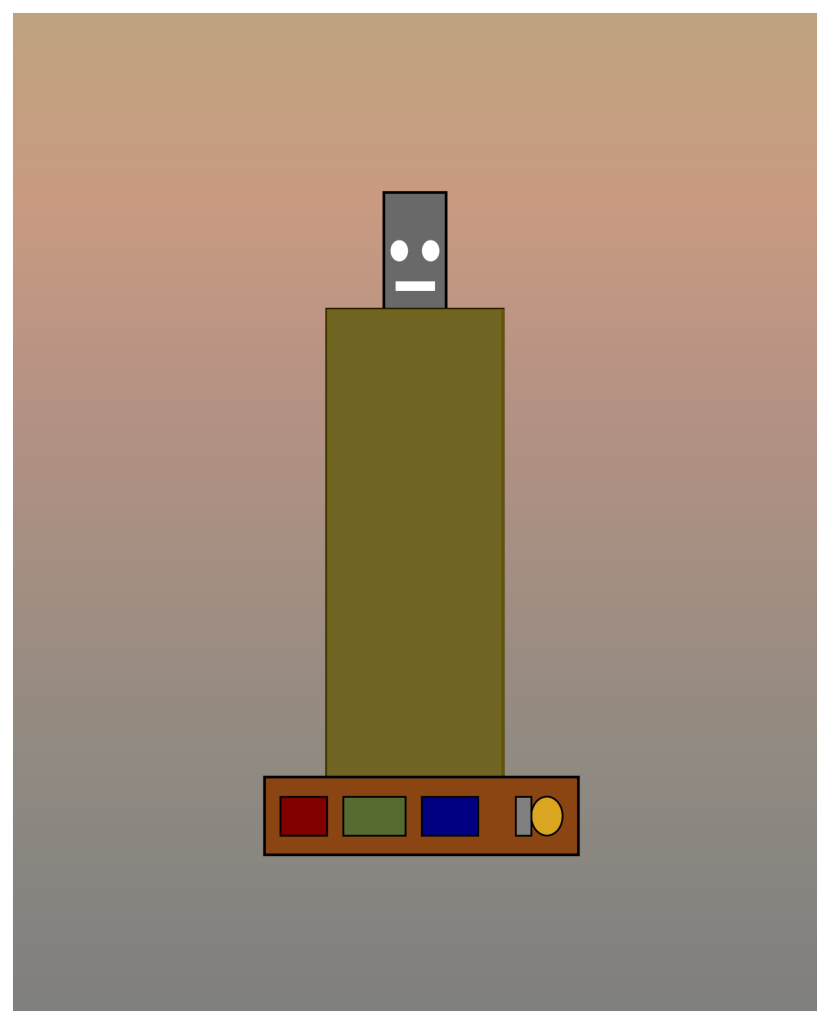

I generally do not use GenAI to create my visuals. I find the overabundance of GenAI art (e.g. at the last conference I went to) quite boring and irritating. But in this case, I wanted an illustration that shows the ‘cloning of the artist, not the artwork’. So I went to ChatGPT to create an example (assuming it was multimodal these days). To prevent running into a filter, I wanted something in the style of Dutch Golden Age painter Rembrandt van Rijn. So, I asked it:

GW: Can you paint me a robot in the style of Rembrandt?

and ChatGPT replied:

To create a painting of a robot in the style of Rembrandt, I’ll combine elements typical of Rembrandt’s work, such as his use of dramatic lighting, rich color palette, and intricate detail, with the futuristic and mechanical aspects of a robot. Here’s a detailed description of the imagined painting:

Title: “The Mechanized Philosopher”

Composition:

• The robot stands or sits in a dark, richly furnished room reminiscent of Rembrandt’s interiors, with deep shadows and warm, golden lighting highlighting its form.

• The robot’s design incorporates both human and mechanical features, with a humanoid shape but visible joints, gears, and metallic surfaces.

• The background includes classic elements such as heavy drapery, a wooden table with an assortment of books and scientific instruments, and a dimly lit window with soft daylight filtering through.

Lighting:

• A strong light source from the side, casting dramatic shadows and creating a chiaroscuro effect, one of Rembrandt’s signature techniques.

• Highlights gleaming on the metallic surfaces of the robot, contrasting with the dark background.

Color Palette:

• Dominant use of earthy tones like browns, golds, and deep reds, interspersed with the cool metallic hues of the robot’s body.

• Subtle use of blues and greens to add depth to the shadows and reflections on the robot’s surface.

Details:

• The robot’s face shows a mixture of human-like features and mechanical precision, with expressive eyes that reflect a sense of wisdom and contemplation.

• Intricate detailing on the robot’s body, with visible wires, gears, and panels, painted with meticulous attention to texture and light.

Mood:

• The overall mood is one of introspection and quiet dignity, evoking the timeless, contemplative quality of Rembrandt’s portraits.

I see what ChatGPT is doing here. It is creating a prompt to hand to the drawing model. And indeed it continued with:

Now, let me create a visual representation of this description for you.

With such a good prompt, I expected a great result. This is what I got:

Here is an artistic representation of “The Mechanized Philosopher” – a robot in the style of Rembrandt. The painting features:

• A dark, richly textured background with dramatic lighting and shadows, characteristic of Rembrandt’s work.

• A robot with human-like features and intricate mechanical details, bathed in a warm, golden light.

• A table with books and scientific instruments, adding to the scholarly and contemplative atmosphere of the scene.

This combination of elements aims to evoke the timeless, introspective quality of Rembrandt’s portraits while incorporating the futuristic essence of a robot.

I must say, ChatGPT has acquired a real sense of humour. Or it is a nice example of completely not understanding your own output.

(It’s the output of python code using matplotlib and numpy)

So, I took ChatGPT’s nice prompt and gave it to another engine (don’t recall which one) and it produced the one on the right at the top of the page. Of course, zoom in and you’ll notice GenAI’s ‘cheap’ (but fair: both the robot and the man in the painting in the background have weird hands):

It’s shoddy. It’s ugly. It’s cheap. But it does the job. And that is what’s going to be exploited. We can count on it.

Whether there is enough to be exploited to recoup the enormous investments is doubtful (as is now being suggested by Goldman Sachs, MSN, Sequoia, and others). We may well see a repeat of the dotcom bust that followed the internet hype of the 1990s. After that bust, the internet lived on happily with the remaining big players, finding its own role in society: the brilliant, the shoddy and the damaging. The economic fixes applied when the dotcom bubble burst led (indirectly) to the crash of 2008, though.

How much real value (revenue for the GenAI firms) is left after the bubble bursts is a trillion dollar question. How much damage the end of the bubble is going to do is a multi-trillion dollar question. So, watch out for tech oligarchs doing everything they can to extend the bubble (e.g. by lobbying). But I digress. Again!

This article is part of the ChatGPT and Friends Collection. In case you found any term or phrase here unclear or confusing, you can probably find a clear explanation there. For example, I can understand that most people do not immediately know what a ‘token’ is (and it is relevant to understand that), or that ‘context’ in LLMs is whatever text has gone before, both human-generated (prompt) and LLM-generated (reply), before producing the next token.

[You do not have my permission to use any content on this site for training a Generative AI (or any comparable use), unless you can guarantee your system never misrepresents my content and provides a proper reference (URL) to the original in its output. If you want to use it in any other way, you need my explicit permission]